Discover The AI

Find 300+ leading AI tools.

We are dedicated to featuring only the best AI tools for each task.

Featured 🔥

PPSPY

PPSPY is a robust Shopify store spy tool focused on dropshipping, employing AI technology to offer features like sales tracking, product research, and Shopify theme

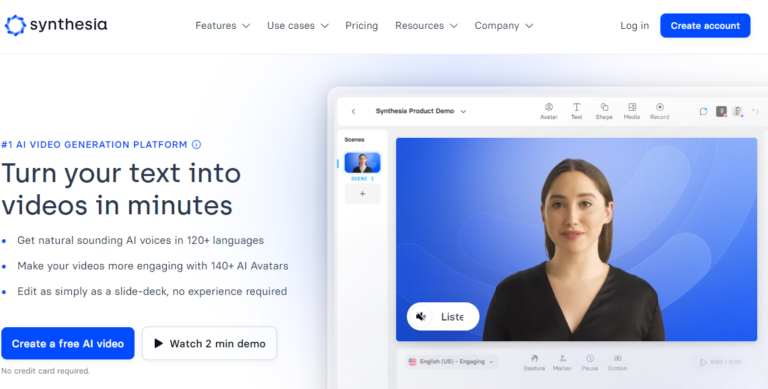

Vizard

Vizard is an AI-powered video editor and screen recorder that simplifies the creation of professional-looking recordings, particularly for webinars, testimonials, and conferences. It automatically segments

Beacons

Beacons is a free platform designed for social media creators, especially on Instagram and TikTok. It offers a ‘link in bio’ feature to consolidate links,

Musicfy

Musicfy is an AI-powered tool that enables users to quickly create their own covers of popular songs by artists such as Ariana Grande, Eminem, and

Rytr

Rytr, with over 7 million users, is an AI writing assistant designed for quick and cost-effective content creation. Offering 40+ templates for blogs, emails, and

Speak AI

Speak AI is an AI tool for marketing and research teams, transforming unstructured audio, video, and text into actionable insights. Its suite includes automated transcription,

Writesonic

Writesonic is AI-powered writing software built on GPT. With Writesonic, you can create articles, blog posts, landing pages, Google and Facebook ad copy, emails,

iMyFone VoxBox

iMyFone VoxBox is a powerful AI voice generator and voice cloning tool that allows users to create realistic and expressive voiceovers using text-to-speech technology. It

Create an Account and Make a Tool List!

Sign up on our website and start saving as many tools as you’d like for later use!

All Tools 🛠️

LearnMentalModels

LearnMentalModels is a website that provides resources and tools for improving thinking skills and decision-making using mental models. It offers an AI Coach that helps users navigate

Mindsera

Mindsera is an AI-powered journaling tool that provides personalized mentorship and feedback to enhance mindset, cognitive skills, mental health, and fitness. It features AI-generated artworks, mindset analysis,

Upheal

Upheal is an AI-powered progress notes tool tailored for mental health professionals, offering automated transcription of therapy notes, video calling, and analytics features. It streamlines note-taking by

Replika

Replika is an AI bot designed for companionship and support, employing machine learning to generate personalized responses for users seeking a positive and non-judgmental environment. Inspired by

Rosebud

Rosebud is an AI-enhanced journaling tool utilizing OpenAI’s GPT-3 model to offer personalized prompts based on user entries, facilitating self-discovery through the Rose Bud Thorn framework. Users

Travopo

Travopo is a comprehensive trip planning tool offering practical travel tools and detailed guides to assist users in planning their journeys. The platform provides inspiration, helps find

Roam Around

Roam Around is an AI-powered tool utilizing GPT-3 technology to help users create personalized travel itineraries based on their preferences and travel history. Users input their travel

TripPlanner.AI

Revolutionize travel itineraries with Trip Planner AI, the ultimate tool for modern travelers. Its advanced AI trip and travel planner capabilities ensure seamless itinerary planning. Users can

Casetext

Casetext is a legal AI company with over a decade of experience, offering CoCounsel, the world’s first reliable AI legal assistant. CoCounsel assists lawyers in tasks such

iAsk.AI

iAsk.AI is a free AI search engine utilizing NLP technology and a large-scale Transformer language-based model to provide accurate answers to user queries. Unlike other tools, it

Phind

Phind is an AI search engine tailored for developers, offering customizable searches and a default setting for user convenience. Developed by Hello Cognition, Inc., it aids users

Semantic Scholar

Semantic Scholar is an AI-driven research tool, offering free access to a vast repository of scientific literature across various fields. With over 211 million papers archived, it

You.com

You.com is an AI-powered search engine prioritizing a personalized and privacy-focused user experience. Driven by artificial intelligence, it features a multi-dimensional interface with horizontal and vertical scrolling

Perplexity AI

Perplexity AI is an advanced answer engine designed to offer precise answers to intricate questions by leveraging large language models and search engines. It excels in comprehending

Rezi.AI

Rezi AI Resume Editor is an advanced resume builder utilizing the GPT-3 API from OpenAI to assist job seekers in crafting effective resumes optimized for applicant tracking

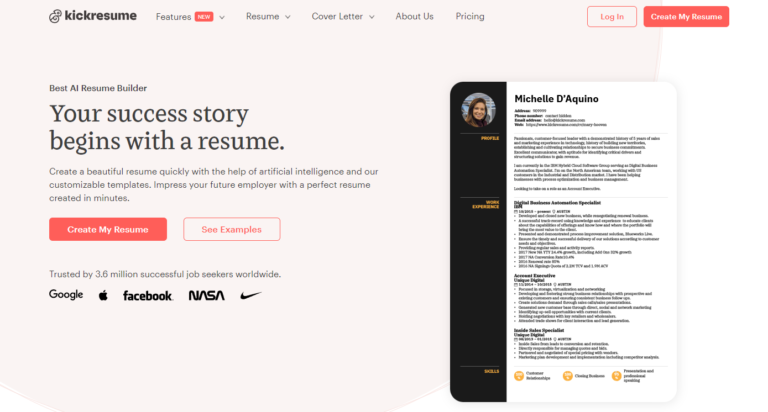

Kickresume

Kickresume is a popular online resume and cover letter builder utilized by over 2 million job seekers globally. It offers professional templates approved by recruiters, along with

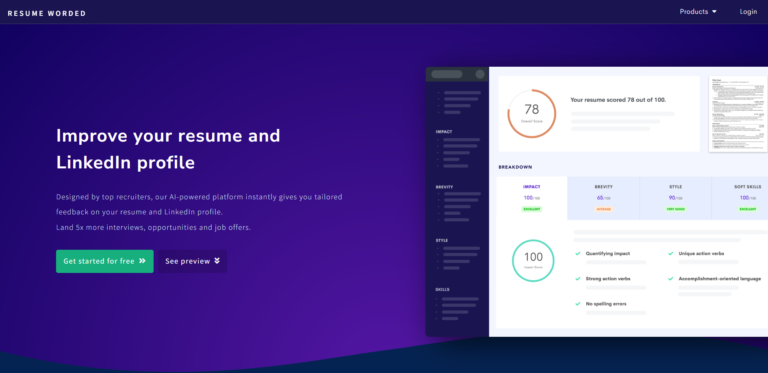

ResumeWorded

Resume Worded is an AI-powered platform that provides instant, tailored feedback on resumes and LinkedIn profiles to enhance job seekers’ chances of securing interviews and opportunities. It

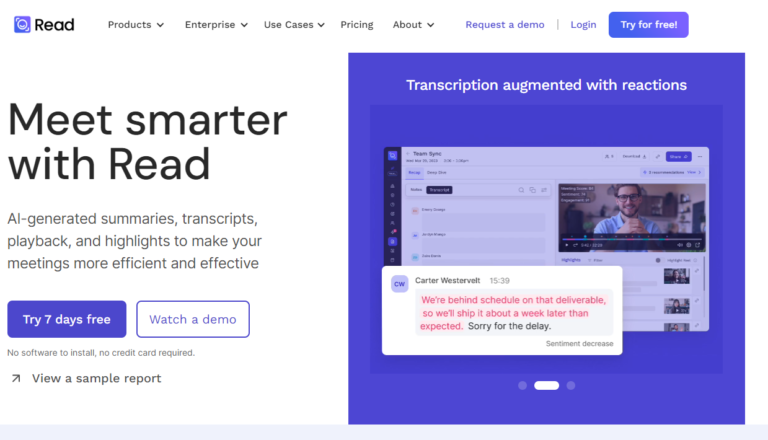

Read.AI

Read is a platform that improves meeting wellness in a hybrid world by offering better scheduling, real-time analytics, meeting summaries, transcription, video playback, automated recommendations, and more.

Discover The AI is one of the leading AI tool websites, with a simple goal: to make AI easier to find and accessible to everyone. You can use our site to easily discover new AI tools. AI is evolving every day, and the time to start using it is now! With over 40 different types of AI tasks, you’re sure to find something that can benefit you or your team!

New AI tools and services launch every day, and keeping track of them all is difficult. We carefully select every tool we list, checking its quality and efficiency, to give users the best tools for each of our tasks.

We hope that Discover The AI helps you find the best AI tools faster and more easily, and saves you hours of searching through sponsored search results!